Keeping the Future Honest: Why Open AI Matters

True openness in AI is essential to counter both inherited historical bias and new forms of distortion. It demands public commitment and civic stewardship similar to that of the public library movement.

The AI we have today is inherently biased. This is not because someone sat down and decided to make it unfair. It is because the people who wrote the books, did the science, presented the news, and populated the early internet were mostly men from the large, English-speaking economies, and mostly white and Christian. This is simply a restatement of an old truth: history is written by the winners. The written record, which we now call data, reflects their point of view. A second layer of bias arises from present-day design choices: what data gets included or excluded, how it is labelled, and what objectives the models are built to optimise.

We know this and we are trying to correct it. There is a great deal to catch up on before the other histories and perspectives of the world are properly represented. The gradual digitisation of life, and the numerical strength of younger populations from developing regions, will hopefully rebalance this over time. In the meantime, we can intervene directly: AI can already be used to analyse its own output against relevant criteria to expose bias and accelerate correction. That helps us balance the past.

History, however, is not the only source of distortion. Correcting the past is possible; protecting the future is harder. Just because we can counter the biases of history does not mean we are safe from new ones. The winners of today and tomorrow are likely to be the United States, China, and large corporations. Few of these actors fully favour open systems or unrestricted speech. States seek to preserve their political order and cultural self-image; commerce pursues profit and, in doing so, values advantage over fairness. The major technology firms have repeatedly sought to limit competition and to retain maximum freedom to manipulate rather than serve—pushing the limits of regulation until forced to stop.

State actors and corporations will therefore introduce new biases, both by amplifying information they prefer and by erecting legal or technical firewalls around what they do not. Much of what passes for “open AI” today is open for strategic reasons, not ethical ones. Early openness helped attract users, gather data, and build dominance before the gates began to close. The companies and their investors need users and data to strengthen their models and maximise advantage before imposing the inevitable paywalls, filters, and censorship. As paywalls rise and content moderation expands, access and information control will increasingly merge, making it harder to retrieve unfiltered material even with skilful prompting.

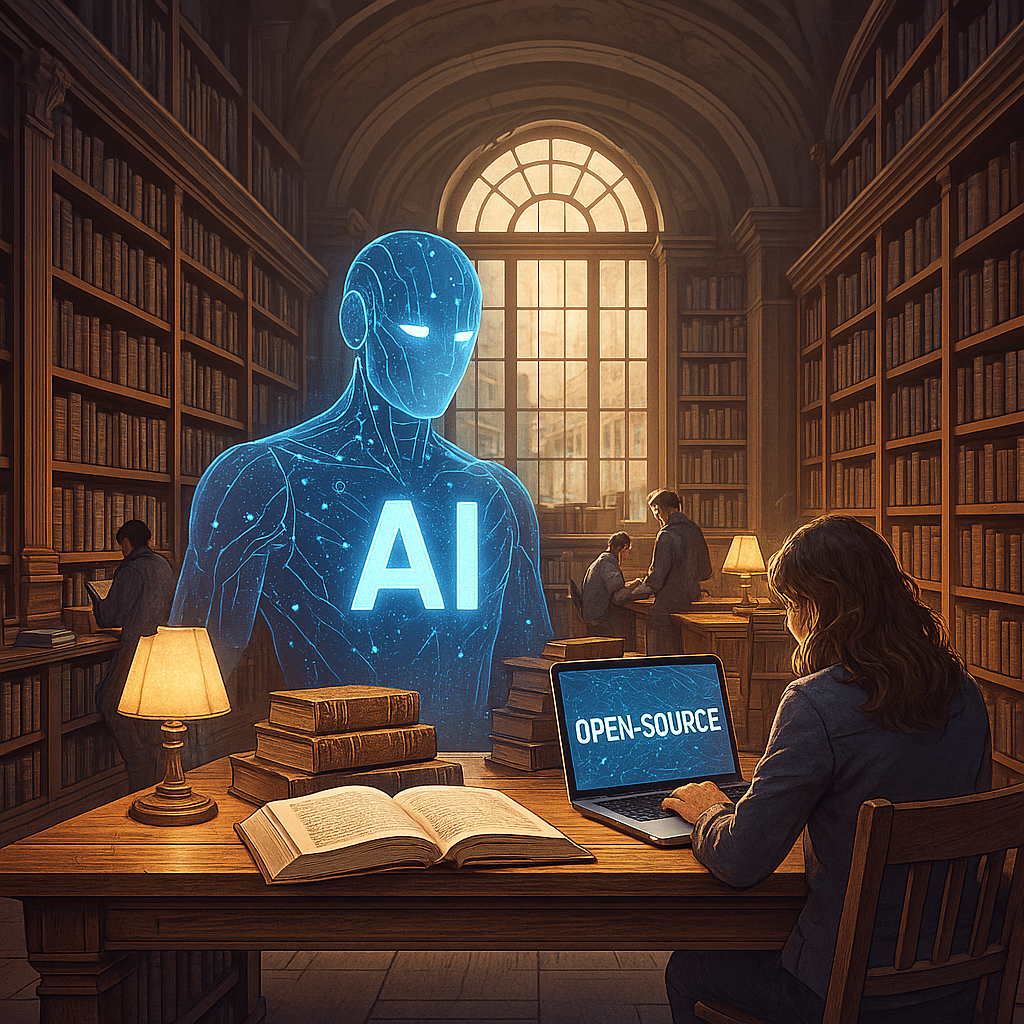

An open-source AI must therefore exist alongside every commercial and state-backed version. Such a system will need sustained funding to remain technically competitive, an organisational backbone, and expert stewardship. It will need philanthropists to extend their end-of-career generosity toward healthy information ecosystems, not just healthy bodies. It probably also needs legal protection, perhaps from the European Union or a similar public institution willing to act as guardian of something it cannot control but must defend.

There is precedent for this. Public libraries were once a radical idea: access to knowledge should not depend on wealth or geography. They turned private learning into a public good and became one of the quiet revolutions of the twentieth century. The idea now looks almost quaint, yet it underpinned the spread of literacy, scientific progress, and civic participation. Libraries made citizens out of readers. Open AI deserves the civic commitment that once built them. Libraries safeguarded knowledge; open AI must both protect and create it. Just as libraries achieved their full impact only when censorship eased, open AI will need the same freedom to realise its purpose. Whatever the model, true openness brings risks (misinformation and deliberate misuse among them), but these are problems to be managed, not reasons for closure.

So enjoy AI as it is, be aware of where its biases come from—past and future—and support a truly open AI however you can. Bias can be managed only if it remains visible.