Outrunning Ourselves: AI, Evolution, and the Urgency of Human-Centred Design

Designing AI for Humans, Not in Spite of Them

We like to think of evolution as something settled, a process that happened rather than one that is still unfolding. But human evolution isn’t just about bones and genes. It encompasses our bodies, our brains, and the full range of emotional and social conditioning that shapes human experience. All of it evolves incredibly slowly: glacially, from the point of view of a single lifetime. For most of human history, that wasn’t a problem. Then this busy, restless social species began changing the conditions of life faster than evolution typically works.

Even before AI, there were clear examples (quiet, physical, and widely misunderstood) of how our biology struggles to keep pace. Milk digestion, jaw size, and lifespan are three of them.

I didn’t realise until recently that the ability to digest milk into adulthood is a genetic anomaly that emerged in a few dairy-farming populations around ten thousand years ago. Today, about a third of adults worldwide carry that trait. It’s not the human default. It’s a hidden adaptation to a cultural shift—one that most people don’t even realise is an evolutionary story. Or take our jaws. As our diets shifted from coarse, raw food to soft, processed meals, our jawbones shrank—but our teeth didn’t. Now many people’s mouths are too small to accommodate their teeth, and dentists make a good living removing wisdom teeth. These are not cosmetic issues. They are the physical signs of a lag between how the body evolves and the changes we have, quite wonderfully, made to improve life.

Life expectancy tells a similar story, though on a much grander scale. For almost 300,000 years, more than 99% of our time as Homo sapiens, average life expectancy was around 30 years. Then, in the space of just two centuries, it more than doubled. By 1900, life expectancy in many countries was still under 40. By 1950 it had risen to around 50. Today, it exceeds 70 globally and 80 in wealthier nations. This isn’t just more of the same; it introduces entirely new stages of human experience. People now live decades beyond the period of child-rearing, with entire adult phases (multiple careers, relationships, losses, and reinventions) that have no real social precedent. We are improvising lives our ancestors never lived long enough to imagine. While we’ve extended the body’s capacity to persist, we haven’t matched it with the emotional or cultural infrastructure needed to carry the weight of so much additional time.

These aren’t random anomalies. They are signs of a growing mismatch between the slow pace of biological and emotional evolution and the accelerating complexity of the environments we are now designing.

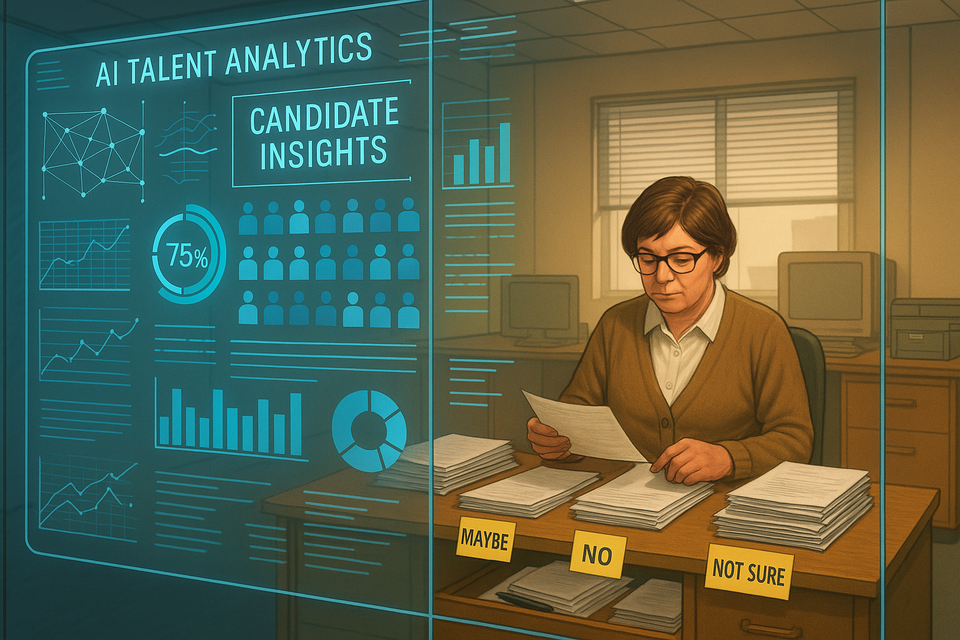

Artificial intelligence will sharpen this disconnect. It is not just a faster machine or a better calculator; it operates in domains we once thought uniquely human—language, judgement, synthesis. In less than a generation, we’ve moved from basic pattern-matching to systems that can generate language, simulate reasoning, and influence decisions that affect real lives. AI won’t be constrained by attention span, emotional context, or the need for rest. It will operate continuously, scale instantly, and shape outcomes across entire systems—in education, hiring, finance, and healthcare.

Neither genetics nor dentists will solve the deeper impact AI is likely to have on what it means to be human. This is not a question of whether machines are smarter than us. When AI begins setting the tempo for how knowledge is produced, distributed, and valued, we won’t just face technological disruption. We will face a kind of existential distortion. The tools that were meant to serve us will begin to reshape us, not deliberately, but structurally. Alongside their benefits, AI systems are likely to make many of us feel inadequate, disoriented, or obsolete.

In this context, the tension many people already feel—disconnection, exhaustion, the difficulty of sustaining attention or meaningful relationships—should be central to the story of AI. These emotions are not signs of weakness or failure. They are the legitimate human response to being asked to function inside systems that no longer reflect what we are. They are not the problem. They are the evidence of a deeper misalignment—and the strongest signal we have that something must be done before these systems solidify into default realities.

Human-centred AI design is no longer a matter of ethics alone. It is now a condition for coherence. How foolish it would be to wait until the full weight of dislocation is upon us, when all we could do is manage the damage. Better to act now—while we still have a chance to shape AI in ways that reflect the slow, embodied, emotionally layered nature of human life. That means building in limits, friction, intervals of rest and doubt. It means recognising that emotional bandwidth and cognitive overload are not inefficiencies to be optimised away, but facts of the organism we are.

If we succeed in designing systems that take this seriously—not in theory, but in architecture—then the discomfort many feel today might prove useful. It might turn out to be not just understandable, but necessary: the pressure that forced us to build technology to serve the human animal, not to bypass it.